Image credit: Unsplash

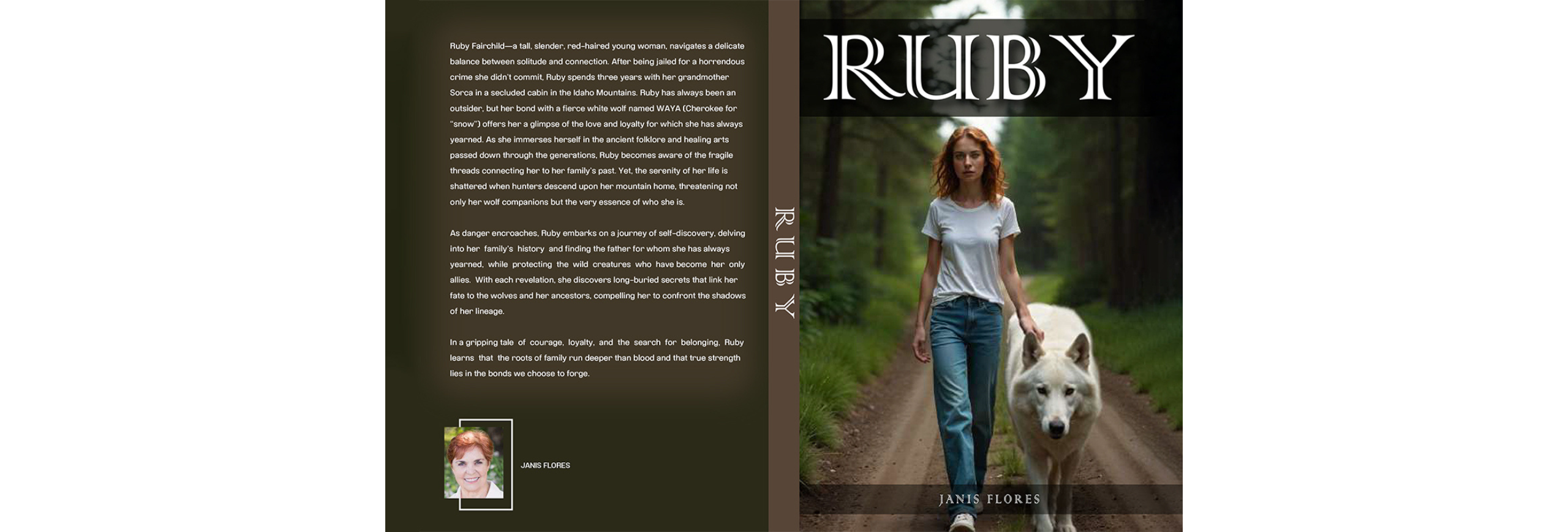

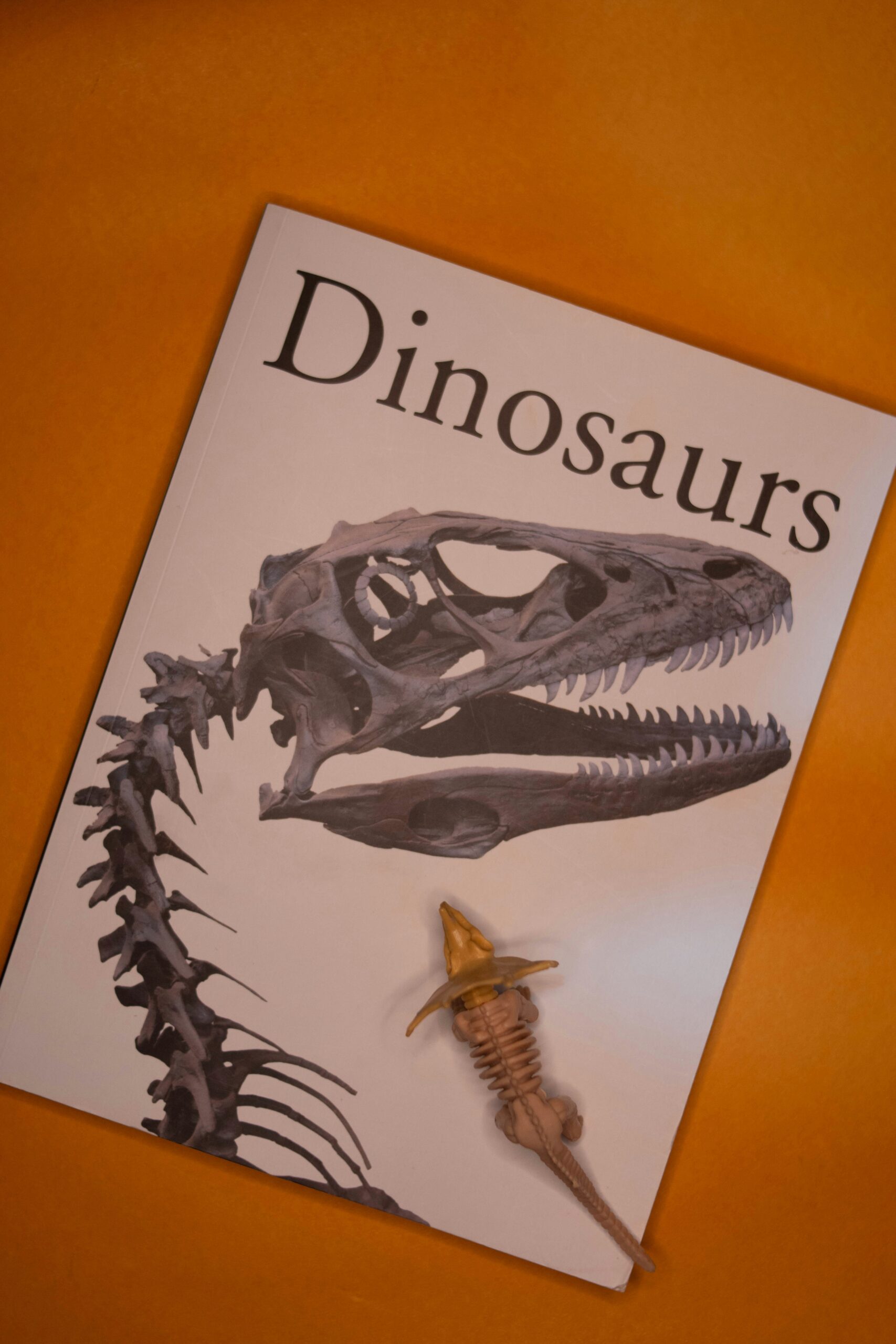

The “Swifties for Trump” AI-generated images shared by former President Donald Trump on his Truth Social account may have done more than upset Swifties around the country. Because the technology is evolving so rapidly, quantifying the harm AI may do to people is challenging, but protecting yourself from being fooled by it is still important.

AI has the potential to transform your daily life and advance humankind on a scale not seen since the Industrial Revolution. Yet it can also create numerous ethical and legal problems. The technology now intersects with both politics and popular culture, as Trump’s AI-generated Swift images showed when they falsely suggested that the global pop star had endorsed him. AI’s growing influence on public perception and political discourse cannot be denied.

AI arguably cannot match your interpersonal skills, but it can still outmaneuver you, however strong those skills are. Social media is one of the easiest places for this to happen.

Social media, like almost anything else you can click on the internet, has the potential to influence you, and it often is trying to. The goal is not always to get you to sign up for something or buy a product; sometimes AI is used to influence you in other ways, as Trump’s AI images of Swift were meant to. Because platforms such as YouTube, TikTok, and Instagram rely on paid advertising, they use AI-driven algorithms that draw on your data to tailor ads to your interests, aiming to elicit emotions and influence your behavior.

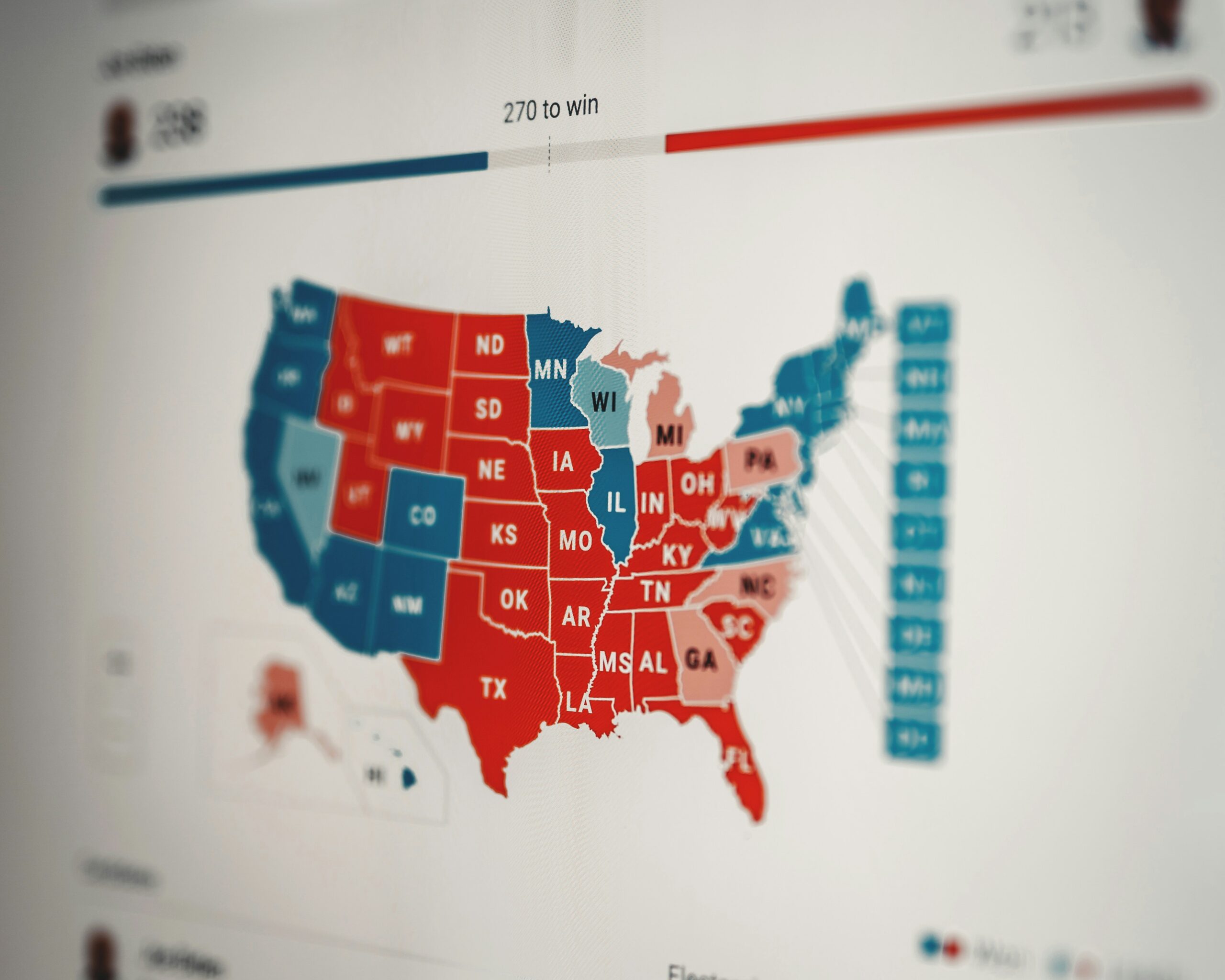

AI’s influence raises critical questions about the risks it poses to democratic societies, potentially challenging our understanding of truth, representation, and the role of technology in politics. These consequences make it necessary to protect yourself from AI’s harmful effects.

Ultimately, protecting yourself from AI-related harm falls primarily on you. It requires exercising more caution and being able to distinguish truth from falsehood, especially during political campaigns. Look for confirming information from multiple sources, evaluate claims critically, and check where they come from.

Generative AI amplifies the risk of disinformation, so it is important to use proven practices for evaluating content, such as seeking authoritative context from credible, independent fact-checkers when you encounter images, video, audio, or unfamiliar websites.

Because generative AI is already being used in the 2024 presidential campaign to mislead voters, it is critical not to rely on AI chatbots or AI-integrated search engines for election information. To protect yourself, turn instead to authoritative sources such as election office websites and recognized experts in the field.

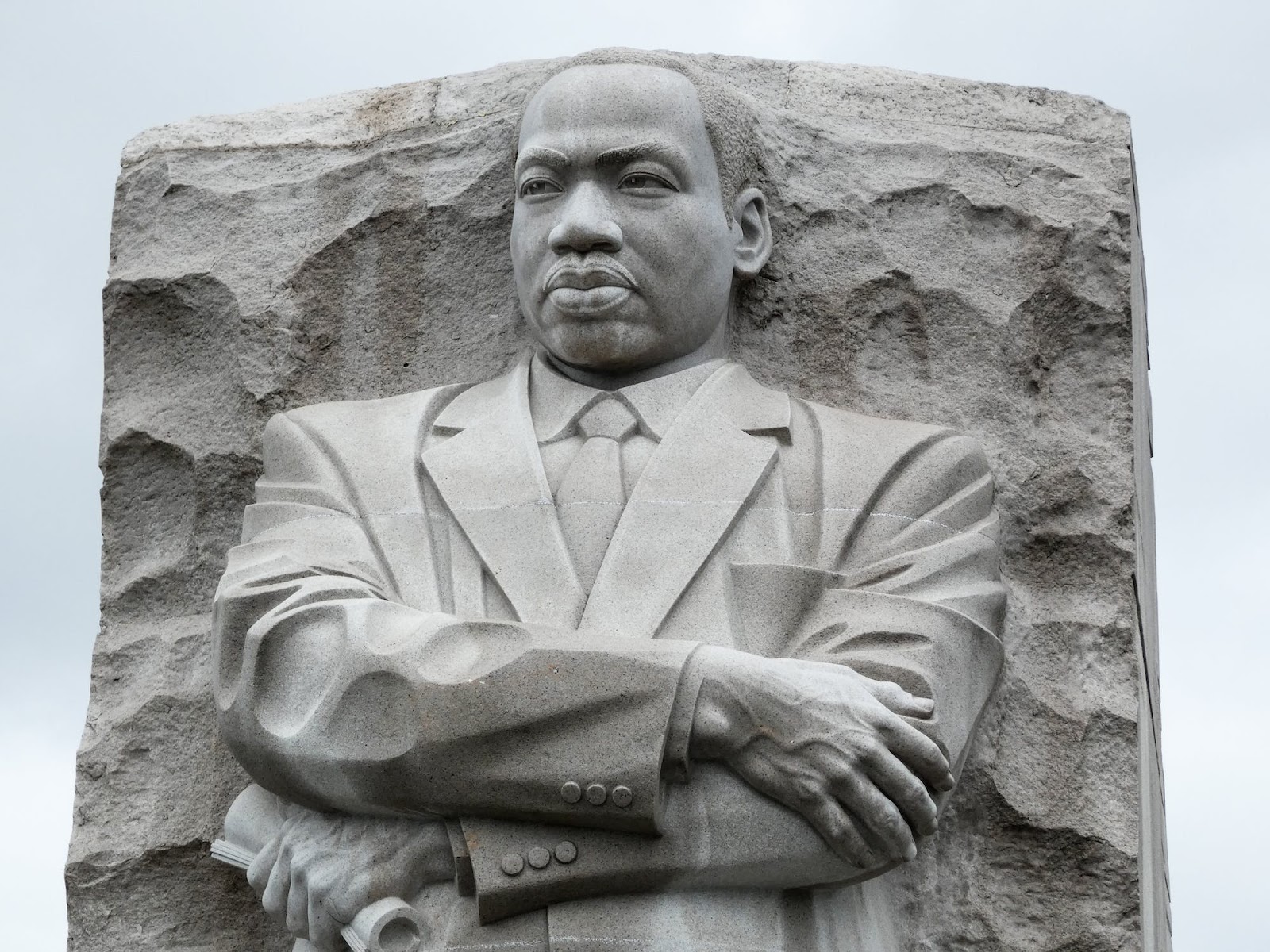

Dr. Lance Y. Hunter, a professor of international relations at Augusta University, is an expert in security studies and in the effects of emerging technologies such as AI on democratization. Because his research focuses on the complex relationships among technology, democracy, and terrorism, Dr. Hunter is well placed to discuss AI’s influence on the political landscape and its potential effects on democratic processes worldwide.

Social media plays a key role in spreading disinformation that can harm democracy. Dr. Hunter reported that social media disinformation can manifest as online political polarization and as the use of social media to organize offline violence, outcomes that reduce overall levels of democracy.

You can help limit the disinformation circulating on social media by staying vigilant, thinking critically about the information you take in, and sharing responsibly any political content that may have been generated by AI, especially during periods of heightened sensitivity such as an election.

By staying informed and critical, engaging in civil discourse, and advocating for transparency and accountability, you can help shape an online ecosystem built on safety and trust.