Image Credit: Pixabay

AI has become the boardroom obsession, yet inside many companies, the big launch never quite arrives. Pilots look promising, slide decks glow, then the tools slip quietly into the background. The trouble usually comes from the uncomfortable moment when real people are asked to rely on systems that don’t behave like calculators.

The Human Problem Inside Shiny AI Plans

Executives approve ambitious projects because the pitch sounds clear: automate repetitive work, surface patterns faster, and free teams to focus on higher-stakes decisions. Inside the organization, the reaction can feel very different. Employees worry about judgment calls shifting to systems they don’t fully understand, or about being blamed when probabilistic outputs collide with real-world stakes.

Those tensions show up in small habits. Teams copy AI-generated text into separate documents, then rewrite it by hand. Analysts rerun queries they claim to trust. Managers introduce silent review steps that turn automation into one more task. The technology moves fast, while expectations stay anchored to an idea of certainty that no model can honestly promise.

Where Wine Tech Meets AI Reality

Wine exposes these gaps in a very public way. Taste is personal, labels are confusing, and context matters as much as the vintage. Created by former Microsoft executive Eric LeVine, CellarTracker began as a personal wine catalog in 1999 and grew into a platform with a deep community database. Over time, the team built AI features on top of that data: receipt parsing, summaries, food pairings, and chat-style guidance.

Each feature came with a behavioral learning curve. Eric LeVine, CellarTracker CEO, said of the models, “they’re very optimistic and polite. And sometimes you just want the honest truth.” Training systems to admit uncertainty, flag possible mistakes, or say no is not easy, but it matters just as much as tuning accuracy scores or improving response times.

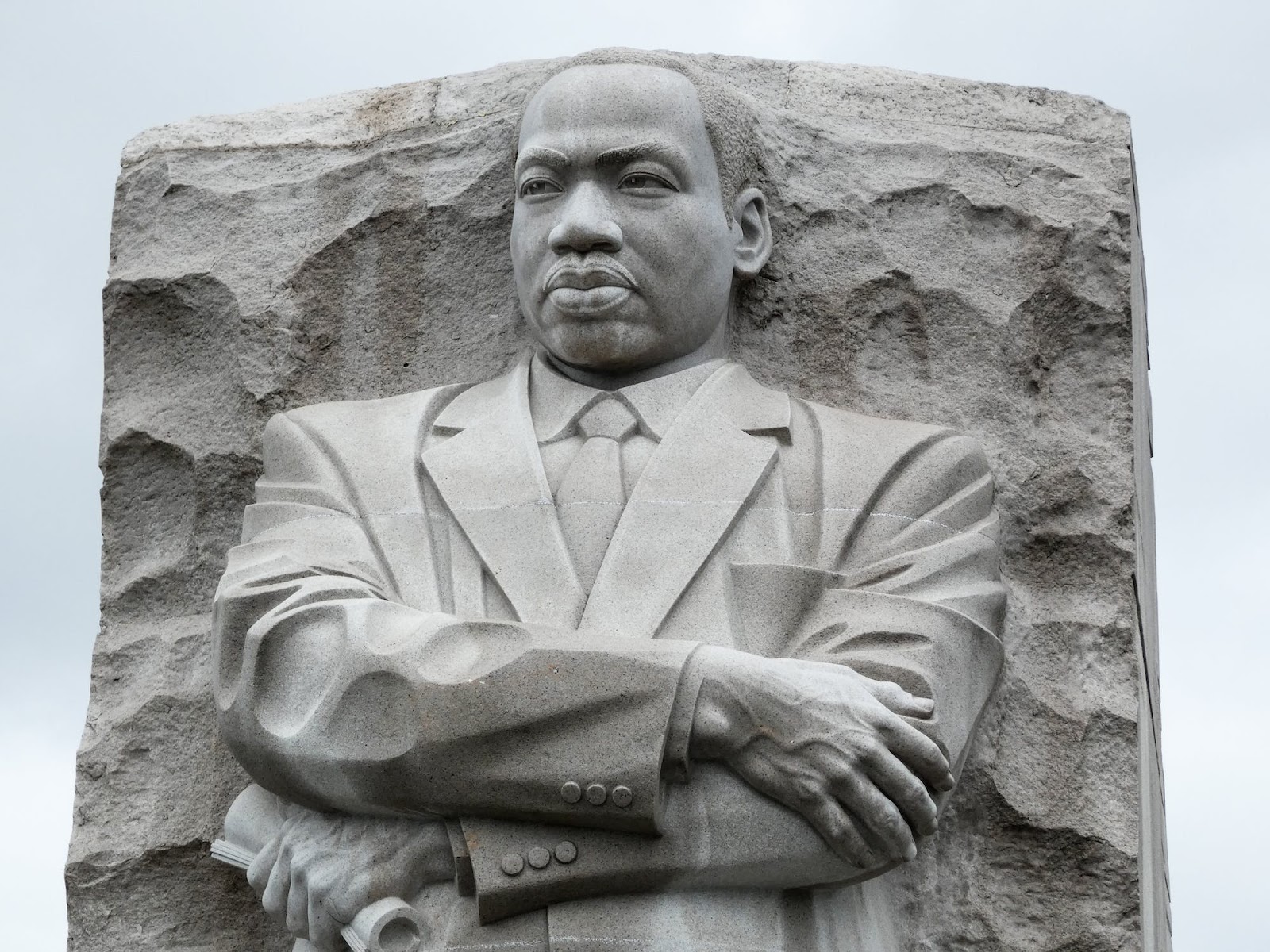

Eric LeVine

Teaching Users to Trust Probabilistic Tools

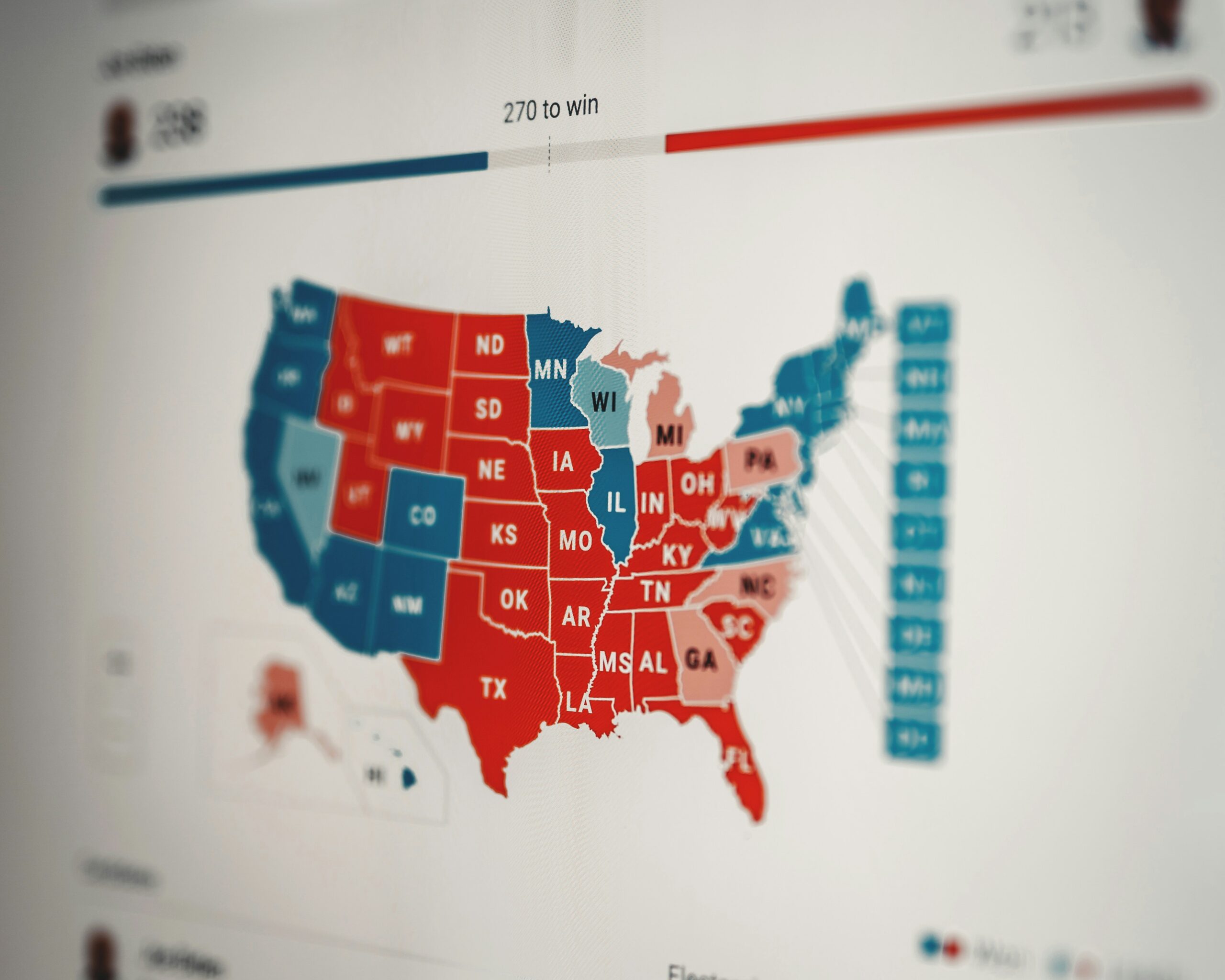

Trust tends to fracture when the same question can produce slightly different answers. In recommendation-heavy products, that variation is expected. For someone choosing a bottle for an anniversary, it can feel like an inconsistency. Users bring calculator-style expectations to tools built on probability, and frustration grows when the outputs don’t line up with that mental model.

Companies respond with stricter testing and clearer design. They compare AI output across large sets of examples, track where errors concentrate, and segment user groups to see who feels comfortable with which features. Interfaces are adjusted so people can see context, ranges, or confidence levels without drowning in raw numbers. Perfection is less important than offering a level of reliability people feel comfortable staking decisions on.

Leadership Pressure and the Timing Problem

Leaders sit in the middle of competing demands. Boards want visible AI momentum, and teams closest to the work ask for time to test, refine, and document limits. Speed helps with headlines, but missteps can damage trust inside and outside the company. That tension shapes which projects ship and which stay in quiet pilot mode.

Some organizations start with narrow tools that help employees behind the scenes. Others launch broad, customer-facing features that promise swift change. The first approach may look slower, but it gives people space to ask questions, challenge results, and learn where the tools genuinely help.

Owning the Long Game of AI Adoption

Across sectors, the pattern repeats. The companies that make AI part of normal work treat adoption as an ongoing process. They talk openly about what models do well, where they fail, and how human judgment stays in the loop.

AI will keep changing how decisions get made, but the change only sticks when organizations accept the messy, human work that comes with it. The technology can handle more of the heavy lifting, but trust still has to be earned the slow way.